--------------------------------------------------

It is crazy to think that in the bad old days, ministers who perhaps knew very little about economics were trusted to make important decisions about such matters as spending and taxation. It was some improvement when the power to set interest rates was transferred to central bankers. But the real breakthrough came when computers became good enough to manage the economy more efficiently than people. The supercomputer Greenspan Two, for example, ran the US economy for twenty years, during which time growth was constant and above the long-term average; there were no price bubbles or crashes; and unemployment stayed low.

Perhaps unsurprisingly, then, the leader in the race for the White House, according to all the (computer conducted and highly accurate) opinion polls is another computer—or at least someone promising to let the computer make all the decisions. Bentham, as it is known, will be able to determine the effects of all policies on the general happiness of the population. Its supporters claim it will effectively remove humans from politics altogether. And because computers have no character flaws or vested interests, Bentham will be a vast improvement on the politicians it would replace. So far, neither the Democrats nor the Republicans have come up with a persuasive counter-argument.

Baggini, J., The Pig That Wants to Be Eaten, 2005, p. 274.

---------------------------------------------------

Given the dangerous and discouraging ineptitude of Republican, Democrat, Tory, and Labour party politicians (to name just a few), we might happily jump at the chance to elect a computer. Heck, Mark Zuckerberg might be smart to try this during the next election. If you're reading this blog, you are probably quite comfortable with computers making decisions, but before we happily replace Donald Trump with one, let's look at the sticking point that Baggini would like us to consider in this experiment. Here is his own discussion of it:

The idea of letting computers run our lives still strikes most of us as a little creepy. At the same time, in practice, we trust ourselves to computers all the time. Our finances are managed almost entirely by computers, and nowadays many people trust an ATM to log their transactions accurately more than a human banker. Computers also run light railways, and if you fly you may be unaware that for long periods the pilots are doing nothing at all. In fact, computers could easily handle landings and take-offs: it's just that passengers can't yet accept the idea of them doing so. ... Dispensing with humans altogether is not so easy. The problem is that the goals need to be set for the computer. And the goal of politics is not simply to make as many people happy as possible. For example, we have to decide how much inequality we are prepared to tolerate. One policy might make more people happy overall, but at the cost of leaving 5 percent of the population in wretched conditions. We might prefer a slightly less happy society where no one has to live a miserable life. A computer cannot decide which of these outcomes is better; only we can do that. ... The day may well come, perhaps sooner than we think, when computers will be able to manage the economy and even public services better than people. But it is harder to see how they could decide what is best for us and send all politicians packing forever.
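Baggini's point about inequality can be made concrete with a toy comparison. The sketch below is a hypothetical illustration, not anything from the book: two made-up policies are scored on a made-up 0-10 happiness scale, once by total happiness (a utilitarian sum) and once by the happiness of the worst-off person (a maximin rule, swapped in here only as one example of an alternative goal). The policy data and function names are invented for the example.

```python
from collections.abc import Callable

# Hypothetical happiness scores on an arbitrary 0-10 scale (illustrative only).
# Policy A: 95 people fairly happy, 5 people in wretched conditions.
# Policy B: everyone slightly less happy, but no one miserable.
policy_a = [8] * 95 + [1] * 5
policy_b = [7] * 100


def total_happiness(scores: list[int]) -> int:
    """Utilitarian criterion: add up everyone's happiness."""
    return sum(scores)


def worst_off(scores: list[int]) -> int:
    """Maximin criterion: judge a policy by its least happy member."""
    return min(scores)


def prefer(criterion: Callable[[list[int]], int], a: list[int], b: list[int]) -> str:
    """Return which policy the given criterion ranks higher ("tie" if equal)."""
    score_a, score_b = criterion(a), criterion(b)
    if score_a > score_b:
        return "A"
    if score_b > score_a:
        return "B"
    return "tie"


print(total_happiness(policy_a), total_happiness(policy_b))  # 765 700
print(prefer(total_happiness, policy_a, policy_b))           # A
print(prefer(worst_off, policy_a, policy_b))                 # B
```

Both rules take a few lines to program, and they disagree. Nothing in the code can say which one to use; that choice is exactly the value judgment Baggini says only we can make.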

Okay, so it must be said that when we talk about replacing politicians with computers, what we really mean is replacing them with algorithms written by computer programmers. It would be circular to have computers determine the goals of those algorithms on their own, so what ultimate goals should we tell the programmers to pursue? The single most important point of this evolutionary philosophy website is to argue that the survival of life in general, over the long term of evolutionary timelines, is the objective goal that forms the basis of what we ought to act towards, but that hasn't been broadly accepted yet. Instead, academic philosophers would probably vote right now that the best goal to give computer programmers would be some form of utilitarianism, where the aim is to "maximize well-being." I hate to rehash my whole long-running argument that "well-being" is subjective until it is grounded in the objective fact that to have well-being you must first exist, which requires the survival of life, but this is a big reason why the computer for president just doesn't work at the moment. For instance, the name of the computer in this thought experiment is inspired by Jeremy Bentham (1748-1832), presumably because of his felicific calculus, which is "an algorithm for calculating the degree or amount of pleasure that a specific action is likely to cause. [And since Bentham was] an ethical hedonist, he believed the moral rightness or wrongness of an action to be a function of the amount of pleasure or pain that it produced. ... The felicific calculus could, in principle at least, determine the moral status of any considered act."

Sounds good in theory, but what does this look like in practice?

Bentham included seven variables in his calculations:

- Intensity: How strong is the pleasure?

- Duration: How long will the pleasure last?

- Certainty or uncertainty: How likely or unlikely is it that the pleasure will occur?

- Propinquity or remoteness: How soon will the pleasure occur?

- Fecundity: The probability that the action will be followed by sensations of the same kind.

- Purity: The probability that it will not be followed by sensations of the opposite kind.

- Extent: How many people will be affected?
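To see how a programmer might start encoding these variables, here is a minimal Python sketch. Bentham gave no units and no combination rule, so everything numeric here is an assumption: each variable is a made-up number, the first four are simply multiplied together, fecundity and purity are modeled as later sensations of the same or opposite sign, and extent is left to the step of repeating the calculation for each person affected. The class name, function names, and example values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Sensation:
    """A single pleasure (positive intensity) or pain (negative intensity).

    All scales are hypothetical; Bentham specified no units.
    """
    intensity: float    # how strong; negative for a pain
    duration: float     # how long it lasts, in arbitrary time units
    certainty: float    # probability (0 to 1) that it occurs at all
    propinquity: float  # weight (0 to 1); nearer to 1 means it arrives sooner


def sensation_value(s: Sensation) -> float:
    """Combine intensity, duration, certainty, and propinquity.

    Multiplying them is an illustrative assumption, not Bentham's rule.
    """
    return s.intensity * s.duration * s.certainty * s.propinquity


def value_for_one_person(first: Sensation, later: list[Sensation]) -> float:
    """Net value of an act for one person.

    Later sensations with the same sign as the first stand in for fecundity;
    those with the opposite sign stand in for impurity.
    """
    return sensation_value(first) + sum(sensation_value(s) for s in later)


# Hypothetical example: an evening out, followed by a possible hangover.
evening = Sensation(intensity=3, duration=2, certainty=0.9, propinquity=0.8)
hangover = Sensation(intensity=-2, duration=4, certainty=0.5, propinquity=0.5)
print(round(value_for_one_person(evening, [hangover]), 2))  # 2.32
```

Every number in that example is a guess, and a different (equally defensible) way of combining the variables would give a different answer.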

And then he gave the following instructions on how to use these variables:

"To take an exact account of the general tendency of any act, by which the interests of a community are affected, proceed as follows. Begin with any one person of those whose interests seem most immediately to be affected by it: and take an account,

- Of the value of each distinguishable pleasure which appears to be produced by it in the first instance.

- Of the value of each pain which appears to be produced by it in the first instance.

- Of the value of each pleasure which appears to be produced by it after the first. This constitutes the fecundity of the first pleasure and the impurity of the first pain.

- Of the value of each pain which appears to be produced by it after the first. This constitutes the fecundity of the first pain, and the impurity of the first pleasure.

- Sum up all the values of all the pleasures on the one side, and those of all the pains on the other. The balance, if it be on the side of pleasure, will give the good tendency of the act upon the whole, with respect to the interests of that individual person; if on the side of pain, the bad tendency of it upon the whole.

- Take an account of the number of persons whose interests appear to be concerned; and repeat the above process with respect to each. Sum up the numbers expressive of the degrees of good tendency, which the act has, with respect to each individual, in regard to whom the tendency of it is good upon the whole. Do this again with respect to each individual, in regard to whom the tendency of it is bad upon the whole. Take the balance which if on the side of pleasure, will give the general good tendency of the act, with respect to the total number or community of individuals concerned; if on the side of pain, the general evil tendency, with respect to the same community."
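Structurally, Bentham's procedure is a simple aggregation, and that part is easy to code. In the minimal sketch below (function names and numbers are hypothetical), each person's pleasures and pains have already been reduced to signed numbers, which is precisely the step no one knows how to do well. The code then balances each person's account and sums the good and bad tendencies across the community, following the quoted instructions.

```python
def person_balance(values: list[float]) -> float:
    """Balance one person's pleasures (positive) against pains (negative)."""
    pleasures = sum(v for v in values if v > 0)
    pains = sum(-v for v in values if v < 0)
    return pleasures - pains


def general_tendency(community: dict[str, list[float]]) -> tuple[float, str]:
    """Sum good and bad tendencies across everyone affected, then balance them."""
    balances = [person_balance(values) for values in community.values()]
    good = sum(b for b in balances if b > 0)   # people for whom the act is good
    bad = sum(-b for b in balances if b < 0)   # people for whom the act is bad
    net = good - bad
    verdict = "good" if net > 0 else "evil" if net < 0 else "neutral"
    return net, verdict


# Hypothetical values; deciding them is the genuinely hard part.
community = {
    "Ann": [5, -2],   # balance +3
    "Bob": [1, -4],   # balance -3
    "Cal": [3],       # balance +3
}
print(general_tendency(community))  # (3, 'good')
```

Keeping separate good and bad totals gives the same net result as summing every person's balance directly; it just mirrors Bentham's bookkeeping and keeps both sides of the ledger visible.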

Do you think any computer programmer or mathematician could come up with formulas and values to accurately and comprehensively model all of this?? Of course not!! And even if they did, we already know that the goal of "maximizing well-being" leads to the Repugnant Conclusion (i.e. 100 billion people with lives barely worth living > 1 billion very happy people), which is an Achilles' heel of utilitarianism that I wrote a long post about. In that post, I listed many of the principles in The Black Swan about robustness and fragility that can be used to guide actions into an unknown future. Those would be important parameters for the algorithm in this week's thought experiment, but rather than reiterate them, perhaps an example of when we've ignored them in a computer model would be more emotionally instructive, especially since it went horribly wrong and we all paid for it.
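The arithmetic behind Derek Parfit's Repugnant Conclusion is easy to reproduce. In this hypothetical sketch, well-being is placed on an invented per-person scale: a world of 1 billion very happy people scores 100 each, and a world of 100 billion people whose lives are barely worth living scores 2 each. Summing well-being, as total utilitarianism does, ranks the crowded world higher; averaging it, an alternative rule swapped in here only for comparison, ranks the happy world higher.

```python
# Hypothetical worlds: (number of people, well-being per person).
# Everyone within a world has the same level, to keep the arithmetic plain.
happy_world = (1_000_000_000, 100)      # 1 billion very happy people
crowded_world = (100_000_000_000, 2)    # 100 billion lives barely worth living


def total_wellbeing(world: tuple[int, int]) -> int:
    """Total utilitarianism: add up everyone's well-being."""
    people, per_person = world
    return people * per_person


def average_wellbeing(world: tuple[int, int]) -> int:
    """Average well-being; equal to the per-person level in a uniform world."""
    _, per_person = world
    return per_person


print(total_wellbeing(happy_world))    # 100000000000
print(total_wellbeing(crowded_world))  # 200000000000
```

The code does not settle which rule is right. It only shows that "maximize well-being" is ambiguous until someone decides how well-being is to be added up, and that decision is not one the computer can make for us.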

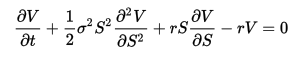

The predictably tragic story of human hubris was told in the BBC documentary Trillion Dollar Bet: The Black-Scholes Formula. It's "the story of one of finance’s greatest formulas, the Black-Scholes option pricing model, which won two of its developers the prestigious Nobel Prize. It explores how the hedge fund which they founded, Long Term Capital Management, which promised to generate large returns with low risk through mathematical models spectacularly blew up and had to be bailed out by a consortium of banks to avoid systemic risk in the financial markets." It's a well-told story that's worth the 45-minute viewing time if you click on the link above, but here are a few choice quotes:

"Traders are convinced that success in the markets is all to do with human judgment and intuition. Qualities that could never be reduced to a formula. However, this view has powerful opposition. An important group of academics who study the markets mathematically believe that such success is largely a matter of luck."

"The formula that Black, Scholes, and Merton unleashed on the world in 1973 was sparse and deceptively simple. Yet this lean mathematical shorthand was the fulfiment of a 50-year quest. Here was a formula, which could, it seemed, get rid of risk in the financial markets. Academics marveled at its breathtaking insights and its sheer audacity."

"By allowing them to hedge their risks constantly, the traders could feel safe enough to conduct business on a scale they had never dreamt possible. The risks in stocks could be hedged against futures. Those in futures against currency transactions. And all of them hedged against a panoply of new complex financial derivatives, many of which were expressly invented to exploit the Black-Scholes formula. The basic dynamic of the Black-Scholes model is the idea that through dynamic hedging, we can eliminate risk. So we have a mathematical argument for trading a lot. What a wonderful thing for exchanges to hear! The more we trade, the better off society is because of the less risk there is. ... Then the inventors of it decided to make money for themselves."

The first few years of Long Term Capital Management went spectacularly well, but that only added to the magnitude of the eventual downfall, which was entirely predictable. When a series of "fluke events" in 1998 brought about things "that had never been seen before," the Black-Scholes model no longer worked, and the fund suffered incredible losses. The hedge fund run by these Nobel Prize winners owed $100 billion and exposed America's largest banks to more than $1 trillion in default risk. The Federal Reserve Bank of New York felt forced to organize a bailout by a group of major banks. This one didn't directly cost taxpayers any money, but fees, interest rates, and market returns changed negatively for everyone, and the precedent of "too big to fail" had been set, which led to 2008's housing crisis, another scapegoat-free bailout, and the strong likelihood that we are on our way to yet another such event. We should know better, but the movie ends with a telling quote about why we don't:

"The beautiful Black-Scholes formula continues to be used millions of times a day by traders who know when to trust it and when to turn instead to their own intuition, by people who understand that the financial markets are places full of dangers and mysteries that have not yet been reduced to a scientific explanation. ... There's one thing that's clear. Over the last several hundred years, we've been able to identify some people who can do it better than others. They don't necessarily go to MIT, they don't necessarily have degrees in mathematics, though that doesn't automatically rule them out. They're the kind of people that can make that judgment that says, "something's different here. I'm going back to harbor until I figure it out." Those are the kind of people you want running your money. But there is a tempting and fatal fascination with mathematics. Albert Einstein warned against it. He said, "Elegance is for tailors. Don't believe in something because it's a beautiful formula."

Alas, we still believe. Partly because it is tempting to ignore our ignorance, and partly because powerful interests make short-term fortunes that they spend convincing us that we should believe. And that, to me, is the real takeaway from this week's thought experiment. Even though an autogovernment could certainly help us model complexity and act towards agreed-upon goals, we must never mistake such contingent knowledge for complete clairvoyance.