---------------------------------------------------

The booth of the clairvoyant Jun was one of the most popular in Beijing. What made Jun stand out was not the accuracy of her observations, but the fact that she was deaf and mute. She would insist on sitting behind a screen and communicating by scribbled notes, passed through a curtain.

Jun was attracting the customers of a rival, Shing, who became convinced that Jun's deafness and muteness were affectations, designed to make her stand out from the crowd. So one day, he paid her a visit, in order to expose her.

After a few routine questions, Shing started to challenge Jun's inability to talk. Jun showed no signs of being disturbed by this. Her replies came at the same speed, the handwriting remained the same. In the end, a frustrated Shing tore the curtain down and pushed the barrier aside. And there he saw, not Jun, but a man he would later find out was called John, sitting in front of a computer, typing in the last message he had passed through. Shing screamed at the man to explain himself.

"Don't hassle me, dude," replied John. "I don't understand a word you're saying. No speak Chinese, comprende?"

Source: Chapter 2 of Minds, Brains, and Science by John Searle (1984)

Baggini, J., The Pig That Wants to Be Eaten, 2005, p. 115.

---------------------------------------------------

The changes that Baggini made to the original experiment don't actually introduce any new concepts to discuss, so we can look directly at the huge literature that already exists on this problem. The Chinese Room was meant to "challenge the claim that it is possible for a computer running a program to have a 'mind' and 'consciousness' in the same sense that people do, simply by virtue of running the right program." This is an attack against both functionalism and the so-called Strong Artificial Intelligence position.

Functionalism, in philosophy of mind, arose in the 1950s. In this view, having a mind does not depend on having a specific biological organ such as a brain; it simply depends on being able to perform the functions of minds, such as understanding, judging, and communicating. As Baggini explains in his discussion of this experiment: "Jun's clairvoyant booth is functioning as though there were someone in it who understands Chinese. Therefore, according to the functionalist, we should say that understanding Chinese is going on."

In contrast to such functionalism, Searle holds a philosophical position he calls biological naturalism: that consciousness and understanding require specific biological machinery that is found in brains. He believes that "brains cause minds and that actual human mental phenomena [are] dependent on actual physical–chemical properties of actual human brains. Searle argues that this machinery (known to neuroscience as the 'neural correlates of consciousness') must have some (unspecified) 'causal powers' that permit the human experience of consciousness."

To see the full strength of Searle's arguments, you also have to know the difference between syntax and semantics. Syntax is concerned with the rules used for constructing, or transforming the symbols and words of a language, while the semantics of a language is concerned with what these symbols and words actually mean to the human mind in relation to reality. Searle argued that the Chinese Room thought experiment "underscores the fact that computers merely use syntactic rules to manipulate symbol strings, but have no understanding of meaning or semantics."
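As a toy illustration of what purely syntactic symbol manipulation looks like (a sketch of my own, not anything Searle or Baggini wrote), the program below answers Chinese messages by exact string lookup. The `RULE_BOOK` entries, the `FALLBACK` reply, and the `respond` function are all hypothetical names invented for this example.

```python
# Hypothetical rule book: maps incoming symbol strings to outgoing ones.
# The program never consults what any of these strings mean.
RULE_BOOK = {
    "你好": "你好",                  # "hello" -> "hello"
    "你好吗？": "我很好，谢谢。",      # "how are you?" -> "I'm fine, thanks."
    "谢谢": "不客气",                # "thank you" -> "you're welcome"
}

# Reply used when no rule matches: "please say that again."
FALLBACK = "请再说一遍。"


def respond(message: str) -> str:
    """Return the reply the rule book dictates, by exact string match only."""
    return RULE_BOOK.get(message.strip(), FALLBACK)


if __name__ == "__main__":
    print(respond("你好吗？"))
```

The English glosses live only in the comments, which the program never reads. That is the sense in which its behaviour is syntactic: swap every string for an arbitrary squiggle and it would run exactly the same way.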

There have already been many criticisms of Searle's argument, which the Stanford Encyclopedia of Philosophy (SEP) entry on the Chinese Room has divided into four categories. Let's take them in order of interest, and I'll try to add some of my own thoughts along the way as I build towards a potential overall solution to Strong AI.

The first of the standard types of reply claims that the Chinese Room scenario is impossible or irrelevant. It's impossible because such a one-to-one rule book for language responses would have to be astronomically large to actually work. For example, the number of ways to arrange 128 balls in a box (10^250) exceeds the number of atoms in the universe (10^80). By extension, the number of ways you could combine thousands of characters in the Chinese language would be incalculably large for today's computers. But this is a philosophical thought experiment, and those are always hypothetical in nature, so practical impossibilities aren't a problem.
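To get a rough feel for that growth, here is a small back-of-the-envelope sketch. It assumes, purely for illustration, an alphabet of 3,000 characters and counts every string of a given length, meaningful or not, so it overstates the number of real sentences. The function names are my own.

```python
import math

ATOMS_IN_UNIVERSE_LOG10 = 80  # order of magnitude quoted in the text
ASSUMED_ALPHABET_SIZE = 3000  # illustrative assumption, not a linguistic claim


def log10_message_count(alphabet_size: int, length: int) -> float:
    """log10 of the number of distinct strings of exactly `length` symbols."""
    return length * math.log10(alphabet_size)


def shortest_length_exceeding(alphabet_size: int, log10_limit: int) -> int:
    """Smallest string length whose count of possible strings exceeds 10**log10_limit."""
    length = 1
    # Python integers are arbitrary precision, so this comparison is exact.
    while alphabet_size ** length <= 10 ** log10_limit:
        length += 1
    return length


if __name__ == "__main__":
    n = shortest_length_exceeding(ASSUMED_ALPHABET_SIZE, ATOMS_IN_UNIVERSE_LOG10)
    print(n, round(log10_message_count(ASSUMED_ALPHABET_SIZE, n), 1))
```

Under these assumptions, strings only 24 characters long already outnumber the 10^80 atoms mentioned above, which is why a literal one-to-one rule book is a practical impossibility.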

Even so, other critics claim it is irrelevant no matter how successfully the Chinese Room was built. They point out that we never really know if another person "understands" or just acts as if they do, so why try to hold the Chinese Room to these standards? Pragmatically, it just doesn't matter. Searle called this the "Other Minds Reply," but Alan Turing noted this thirty years earlier and called it "The Argument from Consciousness." Turing noted that "instead of arguing continually over this point it is usual to have the polite convention that everyone thinks. The Turing test simply extends this 'polite convention' to machines. He doesn't intend to solve the problem of other minds (for machines or people) and he doesn't think we need to." The same might be said for the Chinese Room, except evolution and comparative anatomy dissolve this "other minds" problem for all of us biological descendants of the origin of life. The question, for now, remains open for computers, machines, or Chinese Rooms.

So the Chinese Room isn't irrelevant, and we don't care if it's practically impossible. This forces us to move on and consider the next standard criticism—the "Systems Reply." This reply concedes that the man in the room doesn't understand Chinese, but the output of the room as a whole reflects an understanding of Chinese so you could think of this room as its own system. As Baggini pointed out in his discussion of this, the Systems Reply "isn't quite as crazy as it sounds. After all, I understand English, but I'm not sure it makes sense to say that my neurons, tongue, or ears understand English. But the booth, John, and the computer do not form the same kind of closely integrated whole as a person, and so the idea that by putting the three together you get understanding seems unpersuasive." This line of thinking agrees with Searle, who argued that no reasonable person should be satisfied with the Systems Reply without making an effort to explain how this pile of objects has become a conscious, thinking being.

From an evolutionary philosophy perspective, this criticism of the "Systems Reply" also chimes with what theoretical evolutionary biologists John Maynard Smith and Eors Szathmary said in The Origins of Life in their analysis of ecosystems:

"Consider a present-day ecosystem—for example, a forest or a lake. The individual organisms of each species are replicators; each reproduces its kind. There are interactions between individuals, both within and between species, affecting their chances of survival and reproduction. There is a massive amount of information in the system, but it is information specific to individuals. There is no additional information concerned with regulating the system as a whole. It is therefore misleading to think of an ecosystem as a super-organism." (My emphasis added.)

However, to those who are happy with fuzzy, woo-woo, expanded-consciousness thinking, or even those using a more rational extended phenotype analysis, this could be seen to be a question of perspective. In his forthcoming book I Contain Multitudes, "Ed Yong explains that we have been looking at life on the wrong level of scale. Animals—including human beings—are not discrete individuals, but colonies. We are superorganisms." So, given small or large enough analyses of space and time, our own understanding as an "individual" could be just as unpersuasive as the systems theory that tries to attribute understanding to the "booth-John-computer" system.

This Systems Reply shows, then, how the Chinese Room raises interesting questions about what exactly an individual is, and how answering them requires you to state which perspective you are taking. But the Systems Reply doesn't resolve anything about functionalism or Strong AI. The man in the Chinese room is a minor element in the system (he is basically just the movable arm storing or fetching data from a spinning platter in an old hard drive), so whether or not he understands Chinese is of no consequence. He is not analogous to an entire computer system.

That objection may cripple the Chinese Room as it stands, but it is still worth going on to the third standard reply, which concedes that just running an "if-this-then-respond-with-that" program doesn't lead to understanding, but holds that this is not what Strong AI is really about. Replies along this line offer variations to the Chinese Room that hope to show a computer system could understand. "The Robot Reply" (where a robot body in the Chinese room has sensors to interact with the world) and "The Brain Simulator Reply" (where a neuron-by-neuron simulation of the entire brain is used in the Chinese room) are the best-known variations in this direction. Searle's response to these is always the same: "no matter how much knowledge is written into the program and no matter how the program is connected to the world, it is still in the room manipulating symbols according to rules. Its actions are syntactic and this can never explain what the symbols stand for. Syntax is insufficient for semantics."

Now the question of whether Strong AI is possible turns on the difference between hardware and software. Searle argues that all the hardware improvements in the Robot Reply and the Brain Simulator Reply still don't lead to understanding as long as the software running them is based on syntax.

But what about improvements to the software? Dan Dennett agrees that such software "would have to be as complex and as interconnected as the human brain." The commonsense knowledge reply emphasizes that any program that passed a Turing test would have to be "an extraordinarily supple, sophisticated, and multilayered system, brimming with 'world knowledge' and meta-knowledge and meta-meta-knowledge."

We are getting closer to having such software for our computers. Apple says Siri "understands what you say. It knows what you mean. IBM is quick to claim its much larger ‘Watson’ system is superior in language abilities to Siri. In 2011 Watson beat human champions on the television game show Jeopardy, a feat that relies heavily on language abilities and inference. IBM goes on to claim that what distinguishes Watson is that it 'knows what it knows, and knows what it does not know.' This appears to be claiming a form of reflexive self-awareness or consciousness for the Watson computer system." These claims, though, are little more than marketing that misrepresents what is going on beneath the hood of these advanced search algorithms.

A far more interesting step towards Strong AI was shown in Fei-Fei Li's TED talk from 2015, titled: "How we’re teaching computers to understand pictures." I've embedded the full 18-minute video below and strongly recommend it for viewing, but here are some relevant quotes to give you a quick synopsis:

"To listen is not the same as to hear. To take pictures is not the same as to see. By seeing, we really mean understanding. No one tells a child how to see. They learn this through real world examples. The eyes capture something like 60 frames per second, so by age of three they would have seen hundreds of millions of pictures of the real world. We used the internet to give our computers this quality and quantity of experience. Now that we have the data to nourish our computer's brains we went back to work on our machine learning algorithm, which was modeled on Convolutional Neural Networks. This is just like the brain with neuron nodes, each one taking input from other nodes and passing output to other nodes, and all of it organized in hierarchical layers. We have 24 million nodes with 150 billion connections. And it blossomed in a way no one expected. At first, it just learned to recognize objects, like a child who knows a few nouns. Soon, children develop to speak in sentences. And we've done that with computers now too. Although it makes mistakes, because it hasn't seen enough, and we haven't taught it art 101, or how to appreciate natural beauty, it will learn."
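For readers who want to see the "nodes in hierarchical layers" idea in miniature, here is a minimal sketch. It is a tiny fully connected network rather than a convolutional one, it has nothing like the scale Li describes, and the function names and the sigmoid activation are my own illustrative choices, not details from the talk.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """One layer: each node sums its weighted inputs, adds a bias, and squashes the result."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


def network_forward(inputs, layers):
    """Pass the outputs of each layer on as the inputs of the next one."""
    activations = list(inputs)
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


if __name__ == "__main__":
    # Two inputs -> a hidden layer of two nodes -> one output node.
    # The weights here are arbitrary; a real system learns them from examples.
    hidden = ([[0.5, -0.5], [1.0, 1.0]], [0.0, -1.0])
    output = ([[1.0, -1.0]], [0.0])
    print(network_forward([1.0, 2.0], [hidden, output]))
```

Everything such a network "knows" sits in the numbers in `weights` and `biases`, which training adjusts from examples rather than from hand-written rules.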

In the end, Searle agrees that this is possible. Hooray! Sort of. He allows that in the future, better technology may yield computers that understand. After all, he writes, "we are precisely such machines." However, while granting this physicalist view of the mind-body problem, Searle holds that "the brain gives rise to consciousness and understanding using machinery that is non-computational. If neuroscience is able to isolate the mechanical process that gives rise to consciousness, then it may be possible to create machines that have consciousness and understanding." Without the specific machinery required though, Searle does not believe that consciousness can occur.

Is this really a valid obstruction in the path of Strong AI? To understand that, we have to clear up what Searle means when he says our conscious brains are "non-computational." In theoretical computer science, a computational problem is "a mathematical object representing a collection of questions that computers might be able to solve. It can be viewed as an infinite collection of instances together with a solution for every instance." In other, clearer words from a blogger: "By non-computational I mean something which cannot be achieved in a series of computational steps, or to put it in the language of computer science, something for which no algorithm can be written down. Now what are such aspects which cannot be algorithmically achieved? The answer is any such thought processes which advances in leaps and bounds instead of in a series of sequential steps."

Is there really any such thing? I say there is not. We may imagine that having a "eureka moment" or having something come to us "out of the blue" means that those results didn't have an obvious cause, but that's merely because we don't (or can't) monitor or interrogate our subconscious brain processes thoroughly enough. In the rational universe we live in though, where all effects have causes, there is theoretically nothing that is non-computational.

Now, that doesn't mean we are deterministic or that everything is actually solvable. Just because we can look backwards and see the causes to our actions does not mean we can calculate all current influences and precisely determine future actions. We will never have enough information to do that for all actions. Because of the chaotic complexity of the present, as well as the unknowableness of what the future will discover, our knowledge is always probabilistic. (Just to provide an example, we may all be 99.9999% sure the Earth goes around the Sun, but maybe someday in the future it will be revealed to us that we are actually all in a computer simulation that only makes it appear that way.) So when we are trying to determine the best ways to act to achieve our goals, we are essentially probability calculation machines, since it is probability that provides a way to cope with uncertainty or incomplete information.

Most of our everyday decisions have great certainty to them. I'm very sure that eating breakfast will sustain me to lunch, turning that faucet will give me water for showering, and walking that direction will take me to a store that's open and has what I need. But occasionally we are faced with a choice where it would be impractical or impossible to research and calculate a result that would give us a highly confident decision. Should I turn left or right to find something interesting in this market? Should I order the risotto or the tart for lunch? Should I hire this highly qualified candidate for the job or that one? Should we allow these chemicals into the food chain based on limited, short-term trials that seem to indicate they are okay? What do we do in these situations?

Depending on the importance and potential consequences of the decision, we have all sorts of ways to get over this indecision—recency bias, kin preference, George Costanza's opposite day heuristic, biological tendencies towards optimistic or pessimistic outlooks, contrarianism to social observation, or yielding to outside sources such as to people with stronger feelings or even to the flipping of a coin.

Computer scientists have begun to model this probability into their algorithms—that's really how IBM's Watson 'knows what it knows, and knows what it does not know'. Watson buzzes in when it calculates that the probability of getting the Jeopardy question right is over a certain threshold, based on how many perfect matches or corroborating sources it has found. What I haven't seen, however, is anyone trying to model the effect that emotion has on our own decision-making process. When we are happy and joyful, we try to continue doing what we are doing. When we are frustrated and angry, we try something different, and quickly. When we are sad and depressed, we take stock more slowly before deciding what to do differently. This is a problem, since, as David Hume said, reason is the slave of the passions. It's one of three fundamental ingredients I see that are missing from Strong AI efforts.
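The buzz-in rule described above can be sketched in a few lines. This is not IBM's actual algorithm; the `Candidate` class, the `decide_to_buzz` function, and the 0.5 default threshold are assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    answer: str
    confidence: float  # estimated probability of being correct, from 0.0 to 1.0


def decide_to_buzz(candidates: List[Candidate], threshold: float = 0.5) -> Optional[str]:
    """Return the most confident answer if it clears the threshold, otherwise None."""
    if not candidates:
        return None
    best = max(candidates, key=lambda c: c.confidence)
    return best.answer if best.confidence >= threshold else None


if __name__ == "__main__":
    options = [Candidate("answer A", 0.31), Candidate("answer B", 0.82)]
    print(decide_to_buzz(options))        # confident enough: buzzes with "answer B"
    print(decide_to_buzz(options, 0.9))   # not confident enough: stays silent (None)
```

Staying silent is simply the `None` branch: "knowing what it does not know" amounts here to a confidence score falling below a cutoff. Nothing in the rule depends on mood, which is the gap the rest of this section is about.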

This missing ingredient is not surprising since emotions have never been the strong suit of philosophers or computer scientists. Emotions have just seemed messy and mysterious. But since the 1950s, cognitive psychologists have been building an Appraisal Theory for emotions, which hypothesizes a logical link between our subjective appraisals of a situation and the resulting emotions. As I wrote in my post on emotions:

---------------------------------------------

An influential theory of emotion is that of Lazarus: emotion is a disturbance that occurs in the following order: 1) cognitive appraisal - the individual assesses the event cognitively, which cues the emotion; 2) physiological changes - the cognitive reaction starts biological changes such as increased heart rate or pituitary adrenal response; 3) action - the individual feels the emotion and chooses how to react. Lazarus stressed that the quality and intensity of emotions are controlled through cognitive processes.

Now, what kind of cognitive assessments can you make? Using our logical system of finding a MECE (mutually exclusive, collectively exhaustive) framework to analyze your assessments, we can come up with something new and unique to understand our emotions. Specifically, I say:

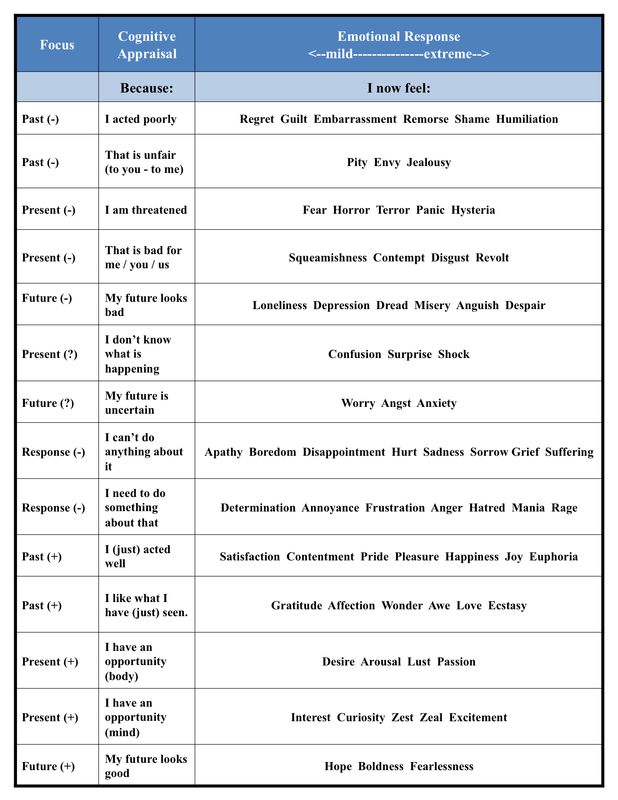

No definitive emotion classification system exists, though numerous taxonomies have been proposed. I propose the following system. Given that emotions are responses to cognitive appraisals, they can be classified according to what we are appraising. In total, we can think about the past, present, or future, and we can judge events to be good or bad, or we can be unsure about them. We can also appraise our options for what to do about negative feelings (positive emotions need no immediate correction). Finally, our emotional responses can range from mild to extreme. A list of emotions might therefore be understood through the following table.

Using this chart of emotions would allow our computer algorithms to get closer to performing with the kind of intelligence we believe that we humans have. We don't recognise cold, hard logic as intelligent because it is insufficient to deal with common scenarios characterised by uncertainty and a lack of evidence. A well calibrated emotional response could drive much more intelligent responses to these situations, however.

But in order to calibrate appropriately, we must be able to appraise something as "good" or "bad", which means we must have definitions for those terms. For the specific tasks for which computers have so far been used, we use an instrumentalist definition of something being good or bad "for this particular task." If we want to dig a hole, a shovel is good while a saw is bad. If we want to cut a piece of wood, the opposite is true. If we want to maximise airline profits, a seat price maximisation algorithm is good while a fixed price algorithm is bad. Those specific definitions make sense for those specific tasks. For Strong AI, however, we must be able to generalise "good" and "bad" in some meaningful way that we all recognise and perhaps already adhere to. This is the second missing ingredient for Strong AI.

As I pointed out in my published paper on morality, all of this is given meaning by the desire for survival. We get from what is in the world, to how we ought to act in the world, by acknowledging our want to survive. Specifically, we ought to be guided by the fundamental want we all logically must have for life in general to survive over evolutionary spans of time.* All of the rest of our emotions should then be driven by the appraisals of whether a situation or action contributes towards that goal (good) or away from it (bad). This is the way meaning could be given to emotional responses that would allow Strong AI software to intelligently navigate our probabilistic universe.

(* As a small side note: this is why we are afraid of sci-fi robots. They do not have this root emotion built into them through a biological connection. We fear they would be immoral because they have no evolved regard for biological life. For Strong AI to be accepted, we must program this desire for life to survive into any Strong AI program. Or build it in directly through the use of biological materials if possible.)

So Strong AI seems very difficult, but it is likely possible. What about the other point of this thought experiment then? What about functionalism?

This brings us to the final overarching argument against the conclusions Searle draws from his Chinese Room. All along, Searle has been saying that one cannot get semantics (that is, meaning) from syntactic symbol manipulation. But some disagree with this. As many of Searle's critics have noted, "a computer running a program is not the same as 'syntax alone'. A computer is an enormously complex electronic causal system. State changes in the system are physical. One can interpret the physical states, e.g. voltages, as syntactic 1's and 0's."

Is this really any different than what is going on in our own brains? With this question, we are really treading on what it means to have an identity and to be conscious. Non-dualists, determinists, and Buddhists alike maintain there is no "I" residing behind the brain, no immaterial "me" observing it all from somewhere else out there. As we saw in My Response to Thought Experiment 38: I Am a Brain, David Hume and Derek Parfit describe identity using bundle theory, saying that we are the sum of our parts and nothing else. Take them away one by one and eventually, nothing is left. If that's true for us, the same would be true for a Strong AI computer. As the SEP article concludes, "AI programmers face many tough problems, but one can hold that they do not have to get semantics from syntax. If they are to get semantics, they must get it from causality." To clarify that, I said at the top of this essay that semantics for a language is concerned with what syntactical symbols and words actually mean to the human mind in relation to reality. This SEP quote says that as long as changes in observed reality cause changes to the semantic symbols, then this is understanding that is no different than our own.

I touched on this in My Response to Thought Experiment 32: Free Simone, where I said:

This is the third missing ingredient for Strong AI. We don't know and understand all of the parts of our consciousness yet, so we don't know exactly how to model them strongly enough to create Strong AI. But after my MECE attempt to Know Thyself, I feel that we are close enough to probably achieve something convincing if we put everything we know together.