As before in this mini-series on epistemology, I'm not going to provide a formal review of this book. I'll just share some selected excerpts that I jotted down and insert a few of my own thoughts and interpretations where necessary. All page numbers refer to the 2021 proof edition from MIT Press.

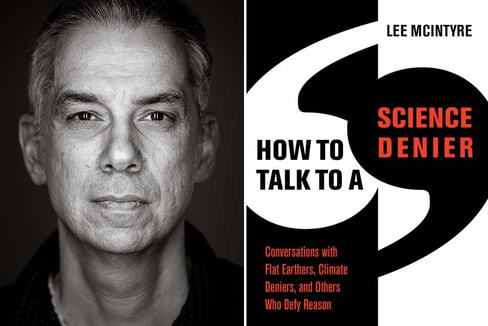

How to Talk to a Science Denier by Lee McIntyre

- (TOC) Introduction; What I Learned at the Flat Earth Convention; What Is Science Denial?; How Do You Change Someone's Mind?; Close Encounters with Climate Change; Canary in the Coal Mine; GMOs: Is There Such a Thing as Liberal Science Denial?; Talking with Trust; Coronavirus and the Road Ahead; Epilogue

This Table of Contents shows you what topics are covered in this book. I don’t know about you, but I got very excited reading this.

- (p.xii) In June 2019, a landmark study was published in the journal Nature Human Behaviour that provided the first empirical evidence that you can fight back against science deniers. … two German researchers—Philipp Schmid and Cornelia Betsch—show that the worst thing you can do is not fight back, because then misinformation festers. The study considered two possible strategies. First, there is content rebuttal, which is when an expert presents deniers with the facts of science. Offered the right way, this can be very effective. But there is a lesser-known second strategy called technique rebuttal, which relies on the idea that there are five common reasoning errors made by all science deniers. And here is the shocking thing: both strategies are equally effective, and there is no additive effect, which means that anyone can fight back against science deniers! You don’t have to be a scientist to do it. Once you have studied the mistakes that are common to their arguments--reliance on conspiracy theories, cherry-picking evidence, reliance on fake experts, setting impossible expectations for science, and using illogical reasoning—you have the secret decoder ring that will provide a universal strategy for fighting back against all forms of science denial.

This is the core idea of the book. If you pay any attention at all to claims from science deniers, you'll see these five mistakes pop up over and over. And it seems possible to make progress against poor arguments by simply pointing these issues out to people. You don’t need to be an expert in epidemiology or voting booth technology or earth sciences. But no matter what, you should continue to talk to people.

- (p.xiv) In his important essay “How to Convince Someone When Facts Fail,” professional skeptic and historian of science Michael Shermer recommends the following strategy: From my experience, (1) keep emotions out of the exchange, (2) discuss, don’t attack (no ad hominem or ad Hitlerum), (3) listen carefully and try to articulate the other position accurately, (4) show respect, (5) acknowledge that you understand why someone might hold that opinion, and (6) try to show how changing facts does not necessarily mean changing worldviews.

And when you do engage with people, these top tips can help keep it civil.

- (p.xv) In my most recent book, The Scientific Attitude: Defending Science from Denial, Fraud, and Pseudoscience (MIT Press, 2019), I developed a theory of what is most special about science, and outlined a strategy for using this to defend science from its critics. In my view, the most special thing about science is not its logic or method but its values and practices—which are most relevant to its social context. In short, scientists keep one another honest by constantly checking their colleagues’ work against the evidence and changing their minds as new evidence comes to light.

- (p.9) In my earlier book, The Scientific Attitude, I had argued that the primary thing that separates science from nonscience is that scientists embrace an attitude of willingness to change their hypothesis if it does not fit with the evidence.

These are great points that fit right in with my review of Why Trust Science? by Naomi Oreskes. It's important to remember that epistemology is a normative discipline, meaning it is concerned with the norms of behavior that we find acceptable and useful for producing knowledge. As McIntyre notes, the values and practices of truth-seeking and fallibilism are core aspects of the scientific method, and I would argue they are necessary for all epistemological efforts.

- (p.13) Conspiracy-based reasoning is—or should be—anathema to scientific practice. Why? Because it allows you to accept both confirmation and failure as warrant for your theory. If your theory is borne out by the evidence, then fine. But if it is not, then it must be due to some malicious person who is hiding the truth. And the fact that there is no evidence that this is happening is simply testament to how good the conspirators are, which also confirms your hypothesis.

Bingo. McIntyre does a great job of pinpointing why conspiracy thinking leads to a bad place where beliefs get stuck and become immune to change.

- (p.17) “What evidence, if it existed, would it take to convince you that you were wrong?” I liked this question because it was both philosophically respectable and also personal. It was not just about their beliefs but about them. … Instead of challenging them on the basis of their evidence, I would instead talk about the way that they were forming their beliefs on the basis of this evidence.

This is another key point of How to Talk to a Science Denier (HTTTASD). This question is an excellent way into the minds of science deniers. It's also the kind of question that can keep working on people long after your conversation with them has ended.

- (p.28) We used to laugh at anti-evolutionists too. How many years before Flat Earthers are running for a seat on your local school board, with an agenda to “teach the controversy” in the physics classroom? If you think that can’t happen—that it couldn’t possibly get that bad—consider this: eleven million people in Brazil believe in Flat Earth; that is 7 percent of their population.

Gah! Watch out for bad thinkers subverting democratic institutions.

- (p.39) Why do some people (like science deniers) engage in conspiracy theory thinking while others do not? Various psychological theories have been offered, involving factors such as inflated self-confidence, narcissism, or low self-esteem. A more popular consensus seems to be that conspiracy theories are a coping mechanism that some people use to deal with feelings of anxiety and loss of control in the face of large, upsetting events. The human brain does not like random events, because we cannot learn from and therefore cannot plan for them. When we feel helpless (due to lack of understanding, the scale of an event, its personal impact on us, or our social position), we may feel drawn to explanations that identify an enemy we can confront. This is not a rational process, and researchers who have studied conspiracy theories note that those who tend to “go with their gut” are the most likely to indulge in conspiracy-based thinking. This is why ignorance is highly correlated with belief in conspiracy theories. When we are less able to understand something on the basis of our analytical faculties, we may feel more threatened by it. There is also the fact that many are attracted to the idea of “hidden knowledge,” because it serves their ego to think that they are one of the few people to understand something that others don’t know.

This is an aside from the points about epistemology that I am focused on at the moment, but understanding the psychology behind the bad beliefs does help me sympathise a bit more with the people who hold them. And that can give me more patience too.

- (p.42) There are myriad ways to be illogical. The main foibles and fallacies identified by the Hoofnagle brothers and others as most basic to science denial reasoning include the following: straw man, red herring, false analogy, false dichotomy, and jumping to a conclusion.

That's another good checklist for noting the errors that people make.

- (p.48) When I was at FEIC 2018, I noted a disproportionate number of people who had had some sort of trauma in their lives. Sometimes this was health-related, other times it was interpersonal. Often it was unspecified. But in every instance the Flat Earther referred to it as in some way related to how they “woke up” and realized that they were being lied to. Many of them embraced a sense of victimization, even before they became Flat Earthers. I have found very little in the psychological literature about this, but I remain convinced that there is something to learn from this hypothesis. I came away from the convention with the feeling that many of the Flat Earthers were broken people. Could that be true for other science deniers as well?

Maybe so! One of the big takeaways from Why Trust Science? was that scientific communities are aiming for broad consensus (broad across all kinds of diversity and all manner of investigations), and this requires good-faith efforts and trust in one another. It makes a lot of sense, then, that someone who loses faith and trust in others as a result of a personal trauma could easily lose the ability to join in with consensus beliefs too. If so, that is doubly damaging.

- (p.49) We now stand on the doorstep of a key insight into the question of why science deniers believe what they believe, even in the face of contravening evidence. The answer is found in realizing that the central issue at play in belief formation—even about empirical topics—may not be evidence but identity.

This is another key takeaway from HTTTASD. And it makes complete sense in light of the discussion above about knowledge building towards consensus rather than truth. We only recognise the good-faith efforts of people we trust to be in our in-groups. That identity can be quite flexible and broad enough to include “anyone trying to tell the truth,” or it can be so rigid and narrow as to only include “those who see the world as I do.” Obviously, the former leads to better outcomes, so be careful who you identify with.

- (p.54) Once you decide who to believe, perhaps you know what to believe. But this makes us ripe for manipulation and exploitation by others. Perhaps this provides the long-awaited link between those who create the disinformation of science denial and those who merely believe it.

Yes! And if you remember from my overview of Kindly Inquisitors, two foundation stones for the liberal intellectual system are “no one gets final say” and “no one has personal authority.” Once you commit to these, you join a team that is far more protected from disinformation. Fake news fizzles out here very quickly after a few checks and balances by your other teammates. If, however, you join a tribe that forms around revealed truths from authority figures, then you become much more susceptible to disinformation. This has got to be a major reason why conservatives retweeted Russian trolls about 31 times more often than liberals in the 2016 election. (Other possible reasons do exist for this too.)

- (p.56) Science denial is an attack not just on the content of certain scientific theories but on the values and methods that scientists use to come up with those theories in the first place. In some sense, science deniers are challenging the scientist’s identity! Science deniers are not just ignorant of the facts but also of the scientific way of thinking. To remedy this, we must do more than present deniers with the evidence; we must get them to rethink how they are reasoning about the evidence. We must invite them to try out a new identity, based on a different set of values.

This is a brilliant point from McIntyre. We need to be much more explicit about the epistemological values and methods we are using. We have to be clear that anyone can join in with them, and this is precisely why they work. Just shouting “trust the science” isn't going to work when “science” is such an underdefined term for the general public. (And that includes too many of the scientists doing the loudest shouting.)

- (p.68) Schmid and Betsch tested four possible ways of responding to subjects who had been exposed to scientific misinformation: no response, topic rebuttal, technique rebuttal, and both kinds of rebuttal. … The clear result of this study was that providing no response to misinformation was the worst thing you could do; with no rebuttal message, subjects were more likely to be swayed toward false beliefs. In a more encouraging result, researchers found that it was possible to mitigate the effects of scientific misinformation by using either content rebuttal or technique rebuttal, and that both were equally effective. There was, moreover, no additive advantage; when both content and technique rebuttal were used together, the result was the same.

What a fascinating study. Good to know.

- (p.119) According to one recent study in the Journal of Experimental Social Psychology, entitled “Red, White, and Blue Enough to be Green,” the persuasive strategy of “moral framing” can make a big difference in making the issue of climate change more palatable to conservatives. By emphasizing the idea that protecting the natural environment was a matter of (1) obeying authority, (2) defending the purity of nature, and (3) demonstrating one’s patriotism, there was a statistically significant shift in conservatives’ willingness to accept a pro-environmental message.

And that is a good data point about this strategy in action.

- (p.175) My message in this book is simple: we need to start talking to one another again, especially to those with whom we disagree. But we have to be smart about how we do it.

- (p.176) Those who are cognizant of the way science works understand that there is always some uncertainty behind any scientific pronouncement, and in fact the hallmark of science is that it cares about evidence and learns over time, which can lead to radical overthrow of one theory for another. But does the public understand this? Not necessarily. And lying to someone—for instance, by saying that masks are 100 percent effective, or that any vaccine is guaranteed to be safe—is exactly the wrong tactic. When scientists do that, any chink in the armor is ripe for later exploitation, and deniers will use it as an excuse not to believe anything further.

- (p.177) I have long held that one of the greatest weapons we have to fight back against science denial is to embrace uncertainty as a strength rather than a weakness of science.

Yes! And this is exactly why I explicitly want to remove the claim to Truth from the JTB (justified true belief) theory of knowledge. Embrace our uncertainty. That's how we remain flexible in our thinking and begin to pay attention to what it really takes to build up confidence.

- (p.180) What if we taught people not just what scientists had found, but the process of conjecture, failure, uncertainty, and testing by which they had found it? Of course scientists make mistakes, but what is special about them is that they embrace an ethos that champions turning to the evidence as a way to learn from them. What if we educated people about the values of science by demonstrating the importance of the scientist’s creed: openness, humility, respect for uncertainty, honesty, transparency, and the courage to expose one’s work to rigorous testing? I believe this kind of science education would do more to defeat science denial than anything else we could do.

Agreed. I really enjoyed the main points that McIntyre drives home in HTTTASD. And there are numerous examples in the book (which I’ve left out of this short blog post) that are absolutely worth the price of admission. I especially enjoyed his stories about attending a Flat Earth convention. Amazing. If you’ve got any science deniers in your life, I highly recommend picking up a copy of McIntyre’s book to help you deal with them. Maybe we’ll all have a better 2022 because of it.