- My background is in physics and philosophy. I worked with Francis Crick after his Nobel Prize. We looked for “the neural correlates of consciousness,” i.e. the minimal physical / biophysical neuronal mechanisms that are jointly sufficient for any one conscious perception. What is necessary for me to “hear” that voice inside my head? Not necessarily to sense it, or process it, but to have that experience.

- We now know it’s really the cortex—the outer-most shell of the brain, size and thickness of a pizza, highly convoluted, left and right hemispheres, the most complex and highly organized piece of matter in the known universe—which gives rise to consciousness.

- This study of the neural correlates of consciousness is fantastic. For example, whenever you activate such and such neurons, you see your mom’s face or hear her voice. And if you artificially stimulate them, you will also have some vague feeling of these things. There is no doubt that scientists have established this close one-to-one relationship between a particular experience and a particular part of the brain.

- Correlates don’t, however, answer why we have this experience. Or how. Or whether something like a bee can be conscious. For mammals it's easy to see the similarity to ourselves. But what about creatures further away from us? Or what about artificial intelligence? How low does it go? Panpsychism says it is everywhere. Maybe it is a fundamental part of the universe.

- To answer these questions, we need a fundamental theory of consciousness.

- I’ve been working on such a theory with Giulio Tononi. It is called Integrated Information Theory (IIT).

- IIT goes back to Aristotle and Plato. In science, something exists to the extent that it exerts causal power over other things. Gravity exists because it exerts power over mass. Electricity exists because it exerts power over charged particles. I exist because I can push a book around. If something has no causal power over anything in the universe, why postulate that it exists?

- IIT says fundamentally what consciousness is, is the ability of any physical system to exert causal power over itself. This is an Aristotelian notion of causality. The present state of my brain can determine one of the trillion future states of my brain. One of the trillion past states of my brain can have determined my current state so it has causal power. The more power the past can exert over the present and future, the more conscious the thing that we are talking about is.

- In principle, you can measure this system. The exact causal power, a number we call phi, is a measure of how much things exist for themselves, and not for others. My consciousness exists for itself; it doesn’t depend on you, it doesn’t depend on my parents, it doesn’t depend on anybody else but me.

- Phi characterizes the degree to which a system exists for itself. If it is zero, the system doesn’t exist. The bigger the number, the more the system exists for itself and is conscious in this sense. Also the type and quality of this conscious experience (e.g. red feels different from blue) is determined by the extent and the quality of the causal power that the system has upon itself.

- Look for the structure within the brain, or the CPU, that has the maximal causal power, and that is the structure that ultimately constitutes the physical basis of consciousness for that particular creature.

- How does this relate to panpsychism? They share some intuitions, but also differ. One of the great philosophical problems with panpsychism is the superposition problem. I’m conscious. You are conscious. Panpsychism says there should be an uber-consciousness that is you and me. But neither of us has any experience of that. Also, every particle of my body has its own consciousness, and there is the consciousness of me and the microphone, or my wife and whatever, or even me and America. But there isn’t anything it feels like to be America. This is the big weakness of panpsychism.

- IIT solves the superposition problem by saying only the maximum of this measure exists. Locally, there is a maximum within my brain or your brain. But the amount of causal interaction between me and you is minute compared to the massive causality within. Therefore, there is you and there is me.

- If we ran wires between two mice or two humans, IIT predicts some things. For example, between my left and right hemispheres there are connections called the corpus callosum. If you cut them, you get split brain syndrome—two conscious entities. If you could do the opposite, you would build an artificial corpus callosum between my brain and your brain. If you added just a few, I would slowly start to see some things that you see, but there would be no confusion as to who is who. As more wires are added, though, IIT says there is a precise point in time when the phi across this system will exceed the information within either single brain, and at that point, the individuals will disappear and the new conscious entity will arise.

- What is right about this as opposed to the Global Neuronal Workspace Theory or other approaches? GNWT only claims to talk about those aspects of consciousness that you can actually speak about. This is called "access consciousness." Once information reaches the level of consciousness, all areas of the brain can use it. If it remains non-conscious, only certain parts of the brain use it.

- There is an "adversarial collaboration" just beginning where IIT and GNWT proponents have agreed on a large set of experiments to see which theory is supported by fMRI, EEG, subjective reporting, etc. In principle this will be great, but practically, we will see.

- Where the theories really disagree is the fundamental nature of consciousness. GNWT embodies the dominant zeitgeist (Anglo-Saxon philosophy, scientists, Silicon Valley, sci-fi, etc.), which says if you build enough intelligence into a machine, if you add feedback, self-monitoring, speaking, etc., sooner or later you will get to a system that is not only intelligent, but also conscious. Ultimately, consciousness is all about behavior. It’s a descendant of behaviorism saying behavior is all we can talk about.

- The other view says no, consciousness is not magical, it’s a natural property of certain systems, but it’s about causal power. To the extent you can build something with causal power, that will be conscious, but you cannot simulate it. E.g. weather simulations don’t cause your computer to get wet. The same thing holds for perfect simulations of the human brain. The simulation will say it is conscious, but it will all be a deep behavioral fake. What you have to do is build a computer in the image of a brain with massive overlapping connectivity and inputs. In principle, this could give rise to consciousness.

- Could a single cell or an atom be conscious? In the limit, it may well feel like something to be a bacterium. It doesn’t have a psychology, and it doesn’t feel fragile or hungry. But it already contains a few billion molecules and a few thousand proteins. We haven’t yet modeled this, but yes, most biological systems may feel like something.

- Has any consciousness of my mitochondria been subsumed into my own? Yes. On its own, a mitochondrion has phi, but IIT says that once it is put together with something else, that consciousness dissolves. If your brain is disassembled, for example when you die, there may be a few fleeting moments where each part again feels like something. In each case you have to ask what is the system that maximizes the integrated information. Only that system exists for itself, is a subject, and has some experience. The other pieces can be poked and studied, but they aren’t conscious.

- The zap and zip technique is being used to look for consciousness in patients who may be locked in or anesthetized irregularly. You zap the brain, like striking a bell, and look at the amount of information that reverberates around the brain. A highly compressible response, one that can be “zipped up” because it carries almost no information, indicates a less conscious state (or even death, if there is no response at all) than a rich response that spreads widely around the brain. This is progress in the mind-body problem. (Note, you don’t have to believe in IIT or GNWT to use this.)

- Right now, we don’t have strong experimental evidence to think that quantum physics has anything to do with the function of brain systems. Classical physics is enough to model everything so far, but you still have to keep an open mind since we don’t understand all causations.
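
As an aside on the zap and zip technique mentioned above, the "zip" step can be illustrated with a general-purpose compressor. The sketch below is only a toy under stated assumptions: it uses Python's `zlib` as a stand-in for the Lempel-Ziv-style complexity measures that, as far as I understand, are applied to real recordings; the two "responses" are synthetic bit patterns rather than EEG data; and the function names (`zip_ratio`, `stereotyped_response`, `differentiated_response`) are hypothetical, invented purely for illustration.

```python
import random
import zlib


def zip_ratio(bits):
    """Compressed size divided by raw size for a sequence of 0/1 values."""
    raw = bytes(bits)  # one byte per binary sample
    return len(zlib.compress(raw, 9)) / len(raw)


def stereotyped_response(n_channels=60, n_samples=300):
    """Toy 'unconscious-like' echo: every channel shows the same brief burst."""
    row = [1] * 10 + [0] * (n_samples - 10)
    return row * n_channels


def differentiated_response(n_channels=60, n_samples=300, seed=0):
    """Toy 'rich' echo: random bits as a crude stand-in for varied activity."""
    rng = random.Random(seed)
    return [rng.randint(0, 1) for _ in range(n_channels * n_samples)]


if __name__ == "__main__":
    print("stereotyped:   ", round(zip_ratio(stereotyped_response()), 4))
    print("differentiated:", round(zip_ratio(differentiated_response()), 4))
```

The stereotyped pattern should shrink to a far smaller fraction of its original size than the differentiated one, which is the intuition behind "zipping" the brain's echo. Random bits are only a convenient placeholder here. Pure noise is not what a conscious brain produces, and a real measurement would also need the response to be a genuine, widespread effect of the zap.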

Brief Comments

Although I found this interview to be a good overview, it still left me with a lot of questions about IIT. So, before I make any comments, I want to share a bit more research that I found helpful.

From the Wikipedia Entry on Integrated Information Theory:

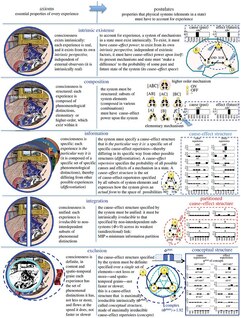

- If we are ever going to make the link between the subjective experience of consciousness and the physical mechanisms that cause it, IIT assumes the properties of the physical system must be constrained by the properties of the experience.

- Therefore, IIT starts by attempting to identify the essential properties of conscious experience (called "axioms"), and then moves on to the essential properties of the physical systems underneath that consciousness (called "postulates").

- Every axiom should apply to every possible experience. The most recent version of these axioms states that consciousness has: 1) intrinsic existence, 2) composition, 3) information, 4) integration, and 5) exclusion. These are defined below.

- 1) Intrinsic existence — By this, IIT means that consciousness exists. Indeed, IIT claims it is the only fact I can be sure of immediately and absolutely, and this experience exists independently of external observers.

- 2) Composition — Consciousness is structured. Each experience has multiple distinctions, both elementary and higher-order. For example, within one experience I may distinguish a book, a blue color, a blue book, the left side, a blue book on the left, and so on.

- 3) Information — Consciousness is specific. Each experience is the particular way that it is because it is composed of a specific set of possible experiences. The experience differs from a large number of alternative experiences I could have had but am not actually having.

- 4) Integration — Consciousness is unified. Each experience is irreducible and cannot be subdivided. I experience a whole visual scene, not the left side of the visual field independent of the right side (and vice versa). Seeing a blue book is not reducible to seeing a book without the color blue, or the color blue without the book.

- 5) Exclusion — Consciousness is definite. Each experience is what it is, neither less nor more, and it flows at the speed it flows, neither faster nor slower. For example, the experience I am having is of seeing a body on a bed in a bedroom, a bookcase with books, one of which is a blue book. I am not having an experience with less content (say, one lacking color), or with more content (say, with the addition of feeling blood pressure).

- These axioms describe regularities in conscious experience, and IIT seeks to explain these regularities. What could account for the fact that every experience exists, is structured, is differentiated, is unified, and is definite? IIT argues that the existence of an underlying causal system with these same properties offers the most parsimonious explanation. The properties required of a conscious physical substrate are called the "postulates" because the existence of the physical substrate is itself only postulated. (Remember, IIT maintains that the only thing one can be sure of is the existence of one's own consciousness.)

From two articles (1,2) about the "adversarial collaboration" between IIT and Global Workspace Theory (GWT):

- Both sides agree to make the fight as fair as possible: they’ll collaborate on the task design, pre-register their predictions on public ledgers, and if the data supports only one idea, the other acknowledges defeat.

- Rather than unearthing how the brain brings outside stimuli into attention, the fight focuses more on where and why consciousness emerges.

- The GWT describes an almost algorithmic view. Conscious behavior arises when we can integrate and segregate information from multiple input sources and combine it into a piece of data in a global workspace within the brain. According to Dehaene, brain imaging studies in humans suggest that the main “node” exists at the front of the brain, or the prefrontal cortex, which acts like a central processing unit in a computer.

- IIT, in contrast, takes a more globalist view where consciousness arises from the measurable, intrinsic interconnectedness of brain networks. Under the right architecture and connective features, consciousness emerges. IIT believes this emergent process happens at the back of the brain where neurons connect in a grid-like structure that hypothetically should be able to support this capacity.

- Koch notes, "People who have had a large fraction of the frontal lobe removed (as it used to happen in neurosurgical treatments of epilepsy) can seem remarkably normal." Tononi added, “I’m willing to bet that, by and large, the back is wired in the right way to have high Φ, and much of the front is not. We can compare the locations of brain activity in people who are conscious or have been rendered unconscious by anesthesia. If such tests were able to show that the back of the brain indeed had high Φ but was not associated with consciousness, then IIT would be very much in trouble.”

- Another prediction of GWT is that a characteristic electrical signal in the brain, arising about 300-400 milliseconds after a stimulus, should correspond to the “broadcasting” of the information that makes us consciously aware of it. Thereafter the signal quickly subsides. In IIT, the neural correlate of a conscious experience is instead predicted to persist continuously while the experience does. Tests of this distinction, Koch says, could involve volunteers looking at some stimulus like a scene on a screen for several seconds and seeing whether the neural correlate of the experience persists as long as it remains in the consciousness.

- It may also turn out that no scientific experiment can be the sole and final arbiter of a question like this one. Even if only neuroscientists adjudicated the question, the debate would be philosophical. When interpretation gets this tricky, it makes sense to open the conversation to philosophers.

Great! So let's get on with some philosophizing.

Right off the bat, the first axiom of IIT is problematic. It is trying to build upon the same bedrock that Descartes did. But that is an infamously circular argument that rested on first establishing that we are created by an all-perfect God rather than an evil demon. Descartes said this God wouldn't let him be deceived about seeing things "clearly and distinctly," which led to his claim that therefore, I am. Now, the first axiom of IIT claims consciousness is the only fact one can be sure of "immediately and absolutely." This is the same argument, and it still doesn't hold up. The study of illusions and drug-altered states of experience shows us that consciousness is not perceived immediately and absolutely. And as Keith Frankish pointed out in my post about illusionism, once that wedge of doubt is opened up, it cannot be closed.

Regardless, let's grant that the subjective experience each of us thinks we are perceiving does actually constitute a worthwhile data point. (Even if this isn't a certain truth, it's a pretty excellent hypothesis.) Talking to one another about all of our individual data points is how IIT comes up with its five axioms. But would it follow from that that ALL conscious experiences have the same five characteristics? No! That would be an enormous leap of induction from a specific set of human examples to a much wider universal rule.

However, despite the universal pretensions of IIT and its definition of phi that could theoretically (though not currently) be calculated for any physical system, when Koch is talking about consciousness, he occasionally is only referring to the very restricted human version of it that requires awareness and self-report. This makes him confusing at times, but that's certainly the consciousness he's talking about for the upcoming "adversarial collaboration" that will test predictions about consciousness by proponents of IIT and GWT. It's great to see such falsifiable predictions being made and tested, and of course the human report of consciousness is where we have to start our scientific studies of consciousness, but it's hard to see how these tests will actually end the debate any time soon. Why? Because as we have seen throughout this series, we just don't have a settled definition for the terms being used in this debate. One camp's proof of consciousness is another camp's proof of something else. They could all seemingly just respond to one another, "but that's not really consciousness."

So, what does IIT say consciousness really is? Koch reports:

> "IIT says fundamentally what consciousness is, is the ability of any physical system to exert causal power over itself."

I've heard Dan Dennett say that vigorous debates occur about whether tornadoes fit this kind of definition of consciousness. Their prior states influence their current and future states. That's a kind of causal power. They are also a physical system that acts as one thing even though none of the constituent parts acts the way the system as a whole does. But does anyone really think a tornado is conscious? Koch continues:

> "My consciousness exists for itself; it doesn’t depend on you, it doesn’t depend on my parents, it doesn’t depend on anybody else but me."

This isn't strictly true, of course. Everything is interrelated. We have no evidence of any uncaused causes in this universe, so Koch's consciousness clearly depends on lots of outside factors. If I shouted that at him, would his consciousness be able to stop him from hearing it? I imagine that's not exactly what Koch meant, but between this and the similarity to Descartes' argument using God to see the world clearly and distinctly, IIT strikes me as practically a religious viewpoint. Tellingly enough, I found out that it is.

In an essay at Psychology Today titled, "Neuroscience's New Consciousness Theory Is Spiritual", there was this passage:

- Most rational thinkers will agree that the idea of a personal god who gets angry when we masturbate and routinely disrupts the laws of physics upon prayer is utterly ridiculous. Integrated Information Theory doesn't give credence to anything of the sort. It simply reveals an underlying harmony in nature, and a sweeping mental presence that isn't confined to biological systems. IIT's inevitable logical conclusions and philosophical implications are both elegant and precise. What it yields is a new kind of scientific spirituality that paints a picture of a soulful existence that even the most diehard materialist or devout atheist can unashamedly get behind.

I'll let the "inevitability" of IIT's logical conclusions slide for now, but is this "sweeping mental presence" just another form of idealism, which George Berkeley used to argue that the mind of God was everywhere and caused all things? It's not from the same source or for exactly the same reason, but it's related. As an essay at the Buddhist magazine Lion's Roar points out, "Leading neuroscientists and Buddhists agree: 'Consciousness is everywhere'." Here we find that:

- Buddhism associates mind with sentience. The late Traleg Kyabgon Rinpoche stated that while mind, along with all objects, is empty, unlike most objects, it is also luminous. In a similar vein, IIT says consciousness is an intrinsic quality of everything yet only appears significantly in certain conditions — like how everything has mass, but only large objects have noticeable gravity.

- In his major work, the Shobogenzo, Dogen, the founder of Soto Zen Buddhism, went so far as to say, “All is sentient being.” Grass, trees, land, sun, moon, and stars are all mind, wrote Dogen.

- Koch, who became interested in Buddhism in college, says that his personal worldview has come to overlap with the Buddhist teachings on non-self, impermanence, atheism, and panpsychism. His interest in Buddhism, he says, represents a significant shift from his Roman Catholic upbringing. When he started studying consciousness — working with Nobel Prize winner Francis Crick — Koch believed that the only explanation for experience would have to invoke God. But, instead of affirming religion, Koch and Crick together established consciousness as a respected branch of neuroscience and invited Buddhist teachers into the discussion.

- At Drepung Monastery, the Dalai Lama told Koch that the Buddha taught that sentience is everywhere at varying levels, and that humans should have compassion for all sentient beings. Until that point, Koch hadn’t appreciated the weight of his philosophy. "I was confronted with the Buddhist teaching that sentience is probably everywhere at varying levels, and that inspired me to take the consequences of this theory seriously," says Koch. "When I see insects in my home, I don't kill them."

These religious motivations don't necessarily mean that the motivated reasoning behind IIT is unsound. But it sure makes me skeptical. The cracks I see in IIT's logic—e.g. starting with seeing consciousness immediately and absolutely, making leaps from human experience to all experience, seeing islands of uncaused causes everywhere—are enough to give me pause. Despite all the fancy math plastered on top of these ideas, I'm still fundamentally unconvinced that consciousness is the integration of information, yet somehow "can't be computed and is the feeling of being alive." As for what I think consciousness really is, it's finally time for me to say. Hope I can get it down clearly!

What do you think? Is IIT flawed to you too? What useful concepts or calculations might it offer?

--------------------------------------------

Previous Posts in This Series:

Consciousness 1 — Introduction to the Series

Consciousness 2 — The Illusory Self and a Fundamental Mystery

Consciousness 3 — The Hard Problem

Consciousness 4 — Panpsychist Problems With Consciousness

Consciousness 5 — Is It Just An Illusion?

Consciousness 6 — Introducing an Evolutionary Perspective

Consciousness 7 — More On Evolution

Consciousness 8 — Neurophilosophy

Consciousness 9 — Global Neuronal Workspace Theory

Consciousness 10 — Mind + Self

Consciousness 11 — Neurobiological Naturalism

Consciousness 12 — The Deep History of Ourselves

Consciousness 13 — (Rethinking) The Attention Schema